
Author: Austin Parker, Principal Developer Advocate at Lightstep


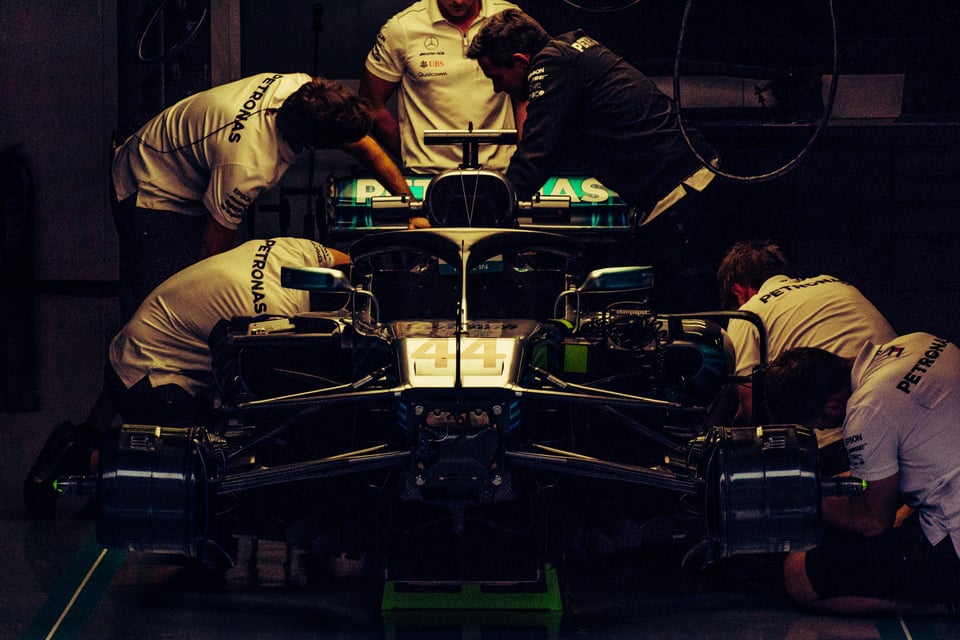

The steering wheel of a Formula 1 vehicle is a terrifyingly complex piece of custom-built machinery. It must withstand speeds of hundreds of miles per hour, up to 6 g of force in turns, and tens of thousands of individual interactions per race. It exposes critical information about the performance and state of its vehicle in such a way that a driver can adjust everything from the mix of oil and gasoline in the engine, to the amount of electricity being recovered by an alternator, to the amount of braking power available, and even the specific rotation of individual wheels for cornering. These operations have to be performed unfailingly, unflinchingly, for hours on end. Failure to correctly interpret a signal or input a desired state isn’t just the difference between a win and a loss -- critical failures of these systems can result in fines, or in the loss of a multimillion-dollar vehicle to a crash.

The design, iteration, and improvement of these steering wheels is a continuous process each season -- manufacturers, drivers, and designers work in concert to improve the feedback available to the driver in the moment. As the systems they control become more complex, the feedback mechanisms must adapt and evolve. The work of building a system to monitor and control another system consumes a lot of time and effort, but without it, the underlying system cannot be understood or operated safely.

If this sounds familiar to you, and you aren’t a race car driver, it may be because you’ve built developer platforms for observability, performance monitoring, CI/CD, and other ends. A natural consequence of the complexity of cloud-native systems is that they demand a certain level of introspection before anyone can make sense of their abstractions. When our applications and systems are small, we can perform this introspection strictly via the outputs of the system. You don’t necessarily need complex logging or monitoring abstractions, because you can keep the state of the system in your head. You can probably fit it on a whiteboard, and there’s a countable number of messages that you can piece together to validate your assumptions.

As systems grow, the requirements for modeling and sense-making also grow. You can’t keep the whole thing in your head anymore, and you can’t represent the components and their relationships in two dimensions as easily. The whiteboard won’t cut it. System growth also brings new audiences for these models. It becomes less important to communicate the technical interconnectedness of the system, and more important to communicate the business value the system drives. The cycle then repeats itself, as more and more probes and monitors are placed in the system to track key indicators of its health and performance. Complexity breeds complexity.

In response to this, many engineers will opt to ‘ship their expertise’ and build Formula 1 steering wheels of their own. It stands to reason that the driver doesn’t necessarily need to understand the details of the system, or the particular ratios of fuel to oil to air, or any of the other low-level details -- they just need some preset options that they can shift between. Similarly, SRE teams and senior engineers will build dashboards, queries, alerts, and metrics from their services and hand them off to the on-call engineers, or opt to train these engineers to explore and query the mass of telemetry emitted from their services so that they can discover answers on their own. They build meta-monitoring tools -- or rely on synthetic checks of predefined routes and workflows -- to communicate service health and performance to a wider audience. As the system grows, new reliability measures are constructed and channeled to users, executives, engineers, and others. Who hasn’t bemoaned a state of the world in which we spend more time and effort on the work of doing work than on the work itself?

Contrast, briefly, the Formula 1 steering wheel with the dashboard of a modern passenger vehicle. The purpose of the vehicle is to move you from point A to point B -- a reductionist take, yes, but bear with me. Operators of a minivan and a Formula 1 car share a similar need: the minimal set of data required to safely accomplish this goal. This requires them to have state and status information about their system, and levers to manipulate that state. The distinction is the degree to which each operator can manipulate the system on a reactive basis, and the level of detail available for those manipulations.

I believe that we, as software engineers, prefer to imagine ourselves as the race car driver. When we get paged for an outage or poor performance, our desired state is to have the maximal amount of information available, and the maximal number of levers to manipulate the underlying system state, in order to correct performance anomalies or otherwise restore service health. I think this ignores a very real fact, which is that most of us didn’t sign up for that level of control -- and it flies in the face of the goal of platform engineering and developer tooling, which is to make things easier rather than harder. In my mind, it’s difficult to square the circle of ‘be more empathetic about on-call’ with monitoring practices that have metaphorical ‘you must be this tall to ride’ signs everywhere. It’s true that we want high-performing teams, but we shouldn’t gate that desire on extremely high levels of systemic expertise and sophistication at the individual level. Furthermore, these requirements cap our ability to distill performance data into reasonable approximations of health for the unsophisticated external observer. This leads to status pages that are driven by marketing fiat rather than data, and to overly rosy internal communications about team and system health.

My solution to this? Think of your system like a minivan, rather than a Formula 1 car. Operators need data and health measurements that are connected to real-world customer experiences, not arbitrary internal performance metrics. Teams should orient themselves around observability primitives like the SLO, and use it as the primary means of communicating service health and reliability. Rather than continuously iterating on query and dashboard design, we should challenge ourselves to create guided, opinionated workflows that work in concert with SLOs to highlight and guide exploration and debugging. We should focus on aggregatable, repeatable, high-quality telemetry through OpenTelemetry, and build it into our systems from Day Zero.
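To make the minivan idea a little more concrete, here is a minimal sketch of how an SLO can collapse raw request counts into a single health reading that anyone can interpret. Everything in it is an assumption for illustration: the `SLO` class, the `error_budget_remaining` and `health_summary` helpers, the "checkout" service name, and the 50% threshold for "at risk" are hypothetical and do not come from any particular tool.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SLO:
    """A hypothetical availability SLO over a fixed window of requests."""
    name: str
    target: float  # e.g. 0.99 means 99% of requests should succeed


def error_budget_remaining(slo: SLO, total: int, failed: int) -> float:
    """Return the unspent fraction of the error budget (negative if overspent)."""
    if total == 0:
        return 1.0  # no traffic in the window, so nothing has been spent
    allowed_failures = (1.0 - slo.target) * total
    if allowed_failures == 0:
        # A 100% target leaves no budget: any failure is an immediate breach.
        return 1.0 if failed == 0 else float("-inf")
    return 1.0 - failed / allowed_failures


def health_summary(slo: SLO, total: int, failed: int) -> str:
    """Reduce the budget to one of three dashboard-light states."""
    remaining = error_budget_remaining(slo, total, failed)
    if remaining > 0.5:  # illustrative threshold, not a standard
        status = "healthy"
    elif remaining > 0.0:
        status = "at risk"
    else:
        status = "breached"
    return f"{slo.name}: {status}"


if __name__ == "__main__":
    checkout = SLO(name="checkout", target=0.99)
    print(health_summary(checkout, total=10_000, failed=20))
```

The operator sees one of three states instead of a wall of dashboards, while the underlying telemetry remains available for anyone who needs to dig deeper.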

Operating our systems shouldn’t be like bolting yourself into a “ground-based fighter jet” -- it’s fun to imagine, but it’s not fun in practice for the people at the sharp end. Let’s build more minivans, and enjoy the ride.

To learn more, check out the O'Reilly report The Future of Observability with OpenTelemetry, written by Ted Young.

Register for SLOconf and listen to Austin's talk at sloconf.com.

Image Credit: Kévin et Laurianne Langlais on Unsplash
